Overview

People

| Institution | Principal Investigator | Group Leader(s) |
|---|---|---|
| University of Edinburgh (UoE) | Prof. Sethu Vijayakumar | Dr. Songyan Xin, Dr. Lei Yan |
| Shenzhen Institute of Artificial Intelligence and Robotics for Society (AIRS) | Assistant Prof. LAM, Tin Lun | Dr. Tianwei Zhang |

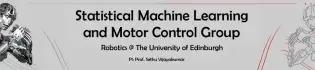

Three Scientific Pillars

- Multi-Contact Planning and Control

- Multi-Agent Collaborative Manipulation

- Robot Perception

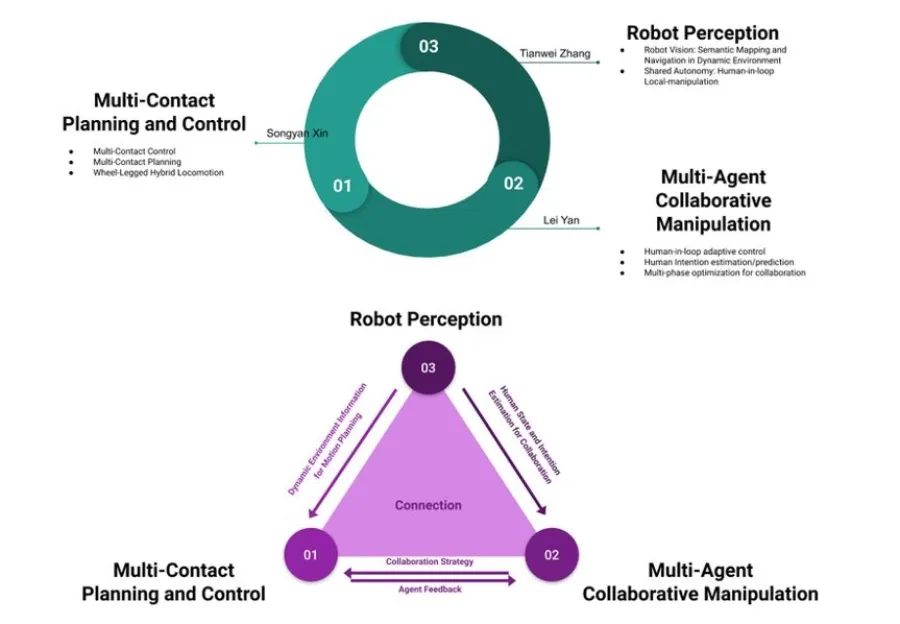

Multi-Contact Planning and Control

Two basic requirements for a robot are the ability to locomote and the ability to manipulate. Locomotion and manipulation in cluttered spaces create additional contacts between several parts of the robot and the environment, and these contacts can be exploited to enhance both capabilities. Multi-contact planning therefore extends beyond footstep planning and balance control. If we define locomotion as moving the robot's own body and manipulation as moving external objects, both can be achieved by planning and controlling the contacts between the robot and the environment. This involves three choices:

- The discrete choice of the sequence of contact combinations

- The continuous choice of contact locations and timing

- The continuous choice of a path between two contact combinations (transition)

The goal of this research is a general framework that handles all of these scenarios by formulating contacts as geometric constraints, together with contact-force variables, in a single general numerical optimization problem.
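To make the formulation more concrete, here is a minimal sketch of its continuous force part under strong simplifying assumptions: a planar point-mass body, contact locations already fixed by the discrete and continuous choices listed above, a linearized Coulomb friction cone with coefficient `mu`, and a minimum-norm force objective. `solve_contact_forces` is a hypothetical helper built on SciPy's SLSQP solver; it is not the project's framework, which also has to optimize contact sequences, locations, and transitions.

```python
import numpy as np
from scipy.optimize import minimize


def solve_contact_forces(contacts, com, mass, mu=0.5, g=9.81):
    """Minimum-norm contact forces holding a planar point-mass body in static equilibrium.

    contacts: (k, 2) contact points (x, z) in the world frame, assumed already chosen.
    com:      (2,) centre-of-mass position (x, z).
    Returns a (k, 2) array of contact forces (fx, fz) in newtons.
    """
    contacts = np.asarray(contacts, dtype=float)
    com = np.asarray(com, dtype=float)
    k = len(contacts)
    weight = mass * g            # forces are optimised in units of body weight
    lever = contacts - com       # moment arms about the CoM

    def equilibrium(x):
        f = x.reshape(k, 2)
        net_force = f.sum(axis=0) + np.array([0.0, -1.0])  # contact forces + gravity
        # planar moment about the CoM; gravity acts at the CoM and adds none
        net_moment = np.sum(lever[:, 0] * f[:, 1] - lever[:, 1] * f[:, 0])
        return np.append(net_force, net_moment)

    def friction_cone(x):
        f = x.reshape(k, 2)
        # linearised cone |fx| <= mu*fz as two inequalities, plus unilateral fz >= 0
        return np.concatenate([mu * f[:, 1] - f[:, 0],
                               mu * f[:, 1] + f[:, 0],
                               f[:, 1]])

    x0 = np.tile([0.0, 1.0 / k], k)  # initial guess: weight shared equally
    res = minimize(lambda x: x @ x, x0, jac=lambda x: 2.0 * x, method="SLSQP",
                   constraints=[{"type": "eq", "fun": equilibrium},
                                {"type": "ineq", "fun": friction_cone}])
    if not res.success:
        raise RuntimeError(f"no feasible contact forces: {res.message}")
    return res.x.reshape(k, 2) * weight
```

For two feet at x = ±0.1 m and a 60 kg body, shifting the CoM toward one foot moves load onto that foot. If the CoM leaves the support region, the constraints become infeasible and SLSQP should report failure, which is the kind of signal a contact planner can use to add or move a contact.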

Platform & System

Talos is an advanced humanoid designed to perform complex tasks in research and industrial settings. It uses torque control to move its limbs and can walk on uneven terrain, sense its environment, and operate power tools.

- Torque sensors in all joints.

- Fully electrical.

- Capable of lifting 6-kilogram payloads per arm.

- Full EtherCAT communications.

- ROS-enabled.

- Dynamic walking, including on uneven ground and stairs.

- Can complete tasks such as drilling or screwing.

- Human-robot interaction skills.
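Torque control means the controller commands joint torques rather than joint positions. A common textbook scheme is PD feedback plus gravity compensation; the sketch below simulates it for a single link modeled as a point mass at its tip. The link mass, length, gains, and the function name `simulate_pd_gravity_comp` are hypothetical and are not Talos parameters or Talos's actual controller.

```python
import math


def simulate_pd_gravity_comp(q_target, q0=0.0, mass=2.0, length=0.4,
                             kp=60.0, kd=8.0, dt=1e-3, steps=5000, g=9.81):
    """Simulate one rigid link (point mass at the tip) driven by a commanded joint torque.

    Control law: tau = kp*(q_target - q) - kd*qd + m*g*l*cos(q)
    The last term cancels gravity, so the PD terms only have to track the target.
    Angles are in radians, measured from the horizontal. Returns the final angle.
    """
    inertia = mass * length ** 2                   # point mass at the tip
    q, qd = q0, 0.0
    for _ in range(steps):
        gravity_torque = mass * g * length * math.cos(q)   # torque needed to hold the link
        tau = kp * (q_target - q) - kd * qd + gravity_torque
        qdd = (tau - gravity_torque) / inertia     # link dynamics: I*qdd = tau - m*g*l*cos(q)
        qd += qdd * dt                             # semi-implicit Euler integration
        q += qd * dt
    return q
```

Without the gravity term, the PD loop alone would settle where kp*(q_target - q) = m*g*l*cos(q), leaving a steady-state error that shrinks only as the gain grows.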

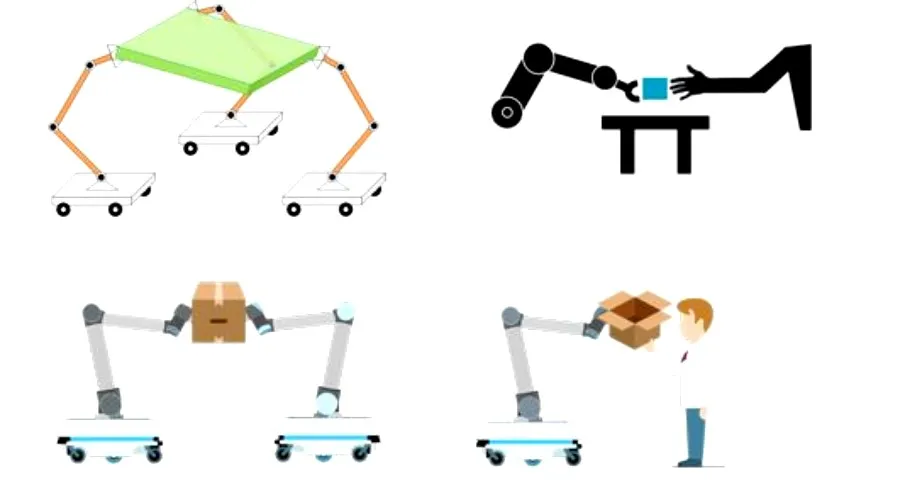

Multi-Agent Collaborative Manipulation

- Multi-phase, multi-contact optimization for manipulating objects with large momentum

- Autonomous task allocation and distributed control for multi-agent collaboration

- Policy learning and adaptive control for efficient human-robot collaboration
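As a baseline for task allocation, the sketch below assigns tasks to robots by minimizing total travel distance with the Hungarian method (SciPy's `linear_sum_assignment`). This is a centralized assignment swapped in for illustration; a distributed allocation scheme, as named above, would typically replace it with something like an auction among agents. The helper `allocate_tasks` and the positions used with it are hypothetical.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def allocate_tasks(robot_positions, task_positions):
    """Assign tasks to robots so that the total travel distance is minimal.

    Returns a dict {robot_index: task_index}. Unequal counts are allowed:
    with more robots than tasks, some robots stay idle.
    """
    robots = np.asarray(robot_positions, dtype=float)
    tasks = np.asarray(task_positions, dtype=float)
    # cost[i, j] = Euclidean distance from robot i to task j
    cost = np.linalg.norm(robots[:, None, :] - tasks[None, :, :], axis=2)
    rows, cols = linear_sum_assignment(cost)
    return {int(r): int(c) for r, c in zip(rows, cols)}
```

For example, robots at (0, 0) and (10, 0) with tasks at (9, 0) and (1, 0) are crossed over, each robot taking the task nearest to it.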

Platform & System

- LBR iiwa Robot Manipulator

LBR iiwa is a 7-axis robot arm designed for safe human-robot collaboration in the workspace. It has joint-torque sensors in all axes to move precisely and detect contact with humans and objects.

- KUKA Mobile Platform

An omnidirectional mobile platform that navigates autonomously and flexibly. Combined with the KUKA Sunrise controller, it enables modular, versatile and, above all, mobile production concepts for future industrial applications.

- Shadow Dexterous Hand

With 20 actuated degrees of freedom, absolute position and force sensors, and ultra-sensitive touch sensors on the fingertips, the hand offers unique capabilities for tasks that require the closest approximation of the human hand currently possible.
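Joint-torque sensing of the kind described for the LBR iiwa allows contact detection by comparing measured torques with what a dynamic model predicts for the commanded motion: a sustained residual indicates an external force. The following is a minimal sketch of that idea with a first-order low-pass filter and a fixed threshold. The expected torques `tau_model` are assumed to come from an inverse-dynamics model that is not shown, and `detect_contacts` and its parameters are hypothetical, not part of any KUKA API.

```python
import numpy as np


def detect_contacts(tau_measured, tau_model, threshold, alpha=0.2):
    """Flag external contact from joint-torque sensing, one decision per control cycle.

    tau_measured: (T, n) torques from the joint-torque sensors (N*m).
    tau_model:    (T, n) torques the dynamic model predicts for the same motion (N*m).
    threshold:    scalar or (n,) tolerance for model error and sensor noise (N*m).
    alpha:        smoothing factor of the low-pass filter on the residual.
    Returns a (T,) boolean array: True where any joint's filtered residual exceeds its threshold.
    """
    residual = np.asarray(tau_measured, float) - np.asarray(tau_model, float)
    filtered = np.empty_like(residual)
    state = np.zeros(residual.shape[1])
    for t, r in enumerate(residual):
        state = alpha * r + (1.0 - alpha) * state   # suppress single-sample sensor spikes
        filtered[t] = state
    return np.any(np.abs(filtered) > threshold, axis=1)
```

The filter trades detection latency for robustness: a 5 N*m step on one joint with a 2 N*m threshold is flagged two cycles after it appears, while isolated noise spikes are attenuated.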

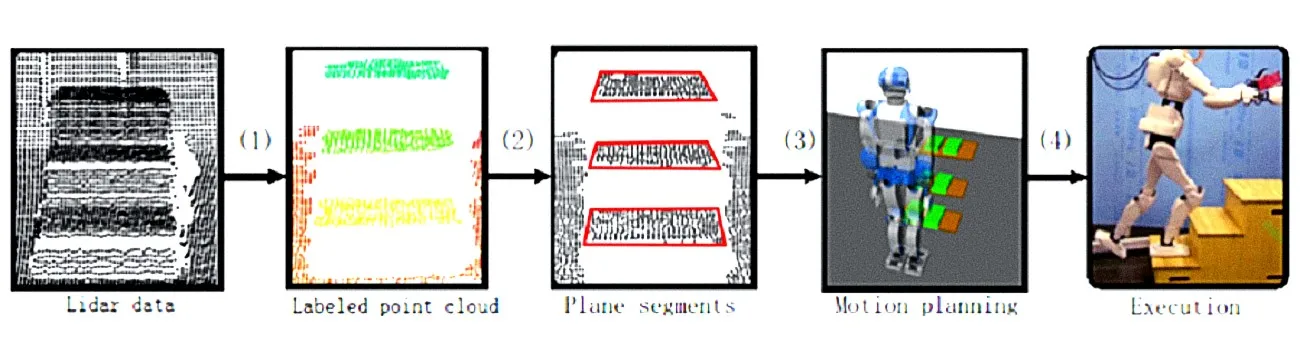

Robot Perception

- State Estimation from Multi-Modal Information

- Vision-Based Tracking in Dynamic Environments
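For vision-based tracking, a standard building block is a Kalman filter with a constant-velocity motion model, which smooths noisy detections and predicts where a target will appear in the next frame. The sketch below tracks one target's pixel position, with velocity in pixels per frame. The class name, the noise levels, and the constant-velocity model itself are assumptions for illustration, not the project's tracker.

```python
import numpy as np


class ConstantVelocityTracker:
    """Kalman filter over the state [x, y, vx, vy] of one target (pixels, pixels/frame)."""

    def __init__(self, dt=1.0, process_noise=1.0, meas_noise=4.0):
        self.F = np.eye(4)
        self.F[0, 2] = self.F[1, 3] = dt          # x += vx*dt, y += vy*dt
        self.H = np.eye(2, 4)                     # only the position is measured
        self.Q = process_noise * np.eye(4)
        self.R = meas_noise * np.eye(2)
        self.x = np.zeros(4)
        self.P = 1e3 * np.eye(4)                  # large initial uncertainty

    def predict(self):
        """Propagate the state one frame ahead; returns the predicted position."""
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.x[:2]

    def update(self, z):
        """Correct the state with a detected position z = (x, y); returns the full state."""
        y = np.asarray(z, float) - self.H @ self.x           # innovation
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)             # Kalman gain
        self.x = self.x + K @ y
        self.P = (np.eye(4) - K @ self.H) @ self.P
        return self.x
```

Call `predict()` once per frame and `update(z)` whenever a detection `z` is available; when the target is briefly occluded, skipping `update` lets the filter coast on its velocity estimate.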

Research Topics

- How can dynamic objects in the robot's workspace be detected and handled using LIDAR or an RGB camera?

- How can a map that the robot can interpret be built from camera or LIDAR point clouds?

- How can data from different sensors (camera, LIDAR, IMU, and torque/force sensors) be fused for robust robot state estimation?
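A minimal example of sensor fusion is a complementary filter that combines an integrated gyroscope rate (smooth but drifting) with the tilt implied by the accelerometer's gravity reading (noisy but drift-free) to estimate pitch. This only sketches the fusion principle for two IMU channels; the blending factor `alpha`, the sample period, and the sign convention `atan2(ax, az)` are assumptions, and robust estimation with camera, LIDAR, and torque/force data would usually call for a Kalman-type filter instead.

```python
import math


def complementary_filter(gyro_rates, accels, dt=0.01, alpha=0.98, theta0=0.0):
    """Estimate pitch (rad) by blending integrated gyro rate with accelerometer tilt.

    gyro_rates: pitch-rate samples from the gyroscope (rad/s); drifts when integrated alone.
    accels:     (ax, az) accelerometer samples (m/s^2); drift-free while the body is not accelerating.
    Returns the list of pitch estimates, one per sample.
    """
    theta = theta0
    estimates = []
    for omega, (ax, az) in zip(gyro_rates, accels):
        theta_acc = math.atan2(ax, az)              # tilt implied by the measured gravity vector
        theta = alpha * (theta + omega * dt) + (1 - alpha) * theta_acc
        estimates.append(theta)
    return estimates
```

For a robot held at 0.2 rad with a constant gyro bias of 0.05 rad/s, the estimate settles at a bounded offset of alpha*bias*dt/(1 - alpha) ≈ 0.0245 rad above the true tilt, whereas integrating the gyro alone would drift by 1 rad over 20 s.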

Platform & System

- LIDAR

- Kinect

- RealSense
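Depth cameras such as the Kinect and RealSense produce depth images that are typically back-projected into point clouds before mapping. The sketch below applies the ideal pinhole model and ignores lens distortion; the intrinsics `fx`, `fy`, `cx`, `cy` would come from the camera's calibration, and `depth_to_point_cloud` is a hypothetical helper rather than part of either vendor's SDK.

```python
import numpy as np


def depth_to_point_cloud(depth, fx, fy, cx, cy):
    """Back-project a depth image (metres, shape H x W) to an (N, 3) point cloud in the camera frame.

    Pinhole model: X = (u - cx) * Z / fx,  Y = (v - cy) * Z / fy,  Z = depth[v, u].
    Pixels with zero depth (no return) are dropped.
    """
    depth = np.asarray(depth, dtype=float)
    v, u = np.indices(depth.shape)          # v: row index, u: column index
    z = depth
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    points = np.stack([x, y, z], axis=-1).reshape(-1, 3)
    return points[points[:, 2] > 0]
```

Points come out in row-major pixel order, which keeps a simple correspondence between image pixels and cloud points for later fusion with LIDAR data.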